How to Write Screening Questions That Actually Filter Bad Participants

The "Professional Tester" Problem

You launch a study targeting "Senior Marketing Managers." You get 10 responses.

- 3 are actually college students looking for extra cash.

- 4 are random people clicking "Yes" to everything.

- 3 are legitimate.

With 7 of your 10 responses unusable, your data is ruined. This is the nightmare of unmoderated testing.

The defense against this? Better screening questions.

Here is the Feedbackerr guide to writing screeners that actually work.

1. Never Ask "Yes/No" Questions

If you ask, "Do you use email marketing software?", the bad participant thinks: "If I say yes, I get paid. YES."

Fix: Turn it into a multiple-choice selection. "Which of the following software tools have you used in the past 6 months?"

- [ ] Adobe Photoshop

- [ ] Mailchimp (Target)

- [ ] Microsoft Excel

- [ ] None of the above

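If they don't select Mailchimp, they are disqualified. The "(Target)" label is a note for you only; participants should see four equally plausible options.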
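If your screener exports raw selections, the rule above can be applied with a few lines of code. This is a minimal sketch, not a Feedbackerr API: the function name `passes_tool_screener` is hypothetical, and the extra check that rejects "None of the above" combined with another option is our own assumption about what counts as a contradictory answer.

```python
# Option labels copied from the example question above.
NONE_OPTION = "None of the above"
TARGET = "Mailchimp"  # the qualifying tool; never labeled as such for participants


def passes_tool_screener(selected):
    """Return True only if the participant ticked the target option."""
    chosen = set(selected)
    # Assumption: "None of the above" plus any other option is contradictory,
    # so we treat it as a failed screener.
    if NONE_OPTION in chosen and len(chosen) > 1:
        return False
    return TARGET in chosen


if __name__ == "__main__":
    print(passes_tool_screener(["Mailchimp", "Microsoft Excel"]))
    print(passes_tool_screener(["Adobe Photoshop"]))
```

Keeping the target in one constant makes it easy to reuse the same check when the qualifying tool changes between studies.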

2. Use "Red Herrings" (Fake Answers)

Professional testers will often select every option to maximize their chances. Catch them by including fake options.

"Which of these financial apps do you use?"

- [ ] Mint

- [ ] YNAB

- [ ] FinTrack Pro (Fake)

- [ ] QuickBooks

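If they select "FinTrack Pro," disqualify them immediately. Before launch, confirm that your decoy name does not match a real product, or you will reject honest participants.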
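The red-herring check is simple to automate. The sketch below assumes you keep your invented option names in a set (here called `DECOYS`, a hypothetical name) and receive each participant's selections as a list of strings.

```python
# Invented product names used as traps; "FinTrack Pro" comes from the example above.
DECOYS = {"FinTrack Pro"}


def picked_red_herring(selected):
    """Return True if any selected option is a known decoy."""
    return any(option in DECOYS for option in selected)


if __name__ == "__main__":
    # A participant who ticks every box is caught by the decoy.
    print(picked_red_herring(["Mint", "YNAB", "FinTrack Pro", "QuickBooks"]))
    # A participant who ticks only real apps is not.
    print(picked_red_herring(["Mint", "QuickBooks"]))
```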

3. Ask for Specifics (Open Text)

Even a well-designed multiple-choice question can be gamed. Open text is much harder to fake.

Instead of just asking if they are a dog owner, add a second question: "Please describe your dog (breed, age, and name)."

A real person will write: "I have a 3-year-old Golden Retriever named Max." A bot or scammer will write: "good" or "yes".

Feedbackerr's manual review tools let you quickly glance at these answers and reject low-quality submissions.
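If you have many submissions, a rough pre-filter can put the most suspicious answers at the top of your review queue. The following is a sketch under our own assumptions: the `LOW_EFFORT` word list and the four-word minimum are illustrative values, not settings from any tool, and the result should feed a manual review rather than replace it.

```python
import re

# Hypothetical list of throwaway answers; extend it from your own rejects.
LOW_EFFORT = {"good", "yes", "no", "ok", "idk", "nice", "none"}


def looks_low_effort(answer, min_words=4):
    """Flag answers that are very short or made up only of throwaway words."""
    words = re.findall(r"[a-z0-9']+", answer.lower())
    return len(words) < min_words or all(w in LOW_EFFORT for w in words)


if __name__ == "__main__":
    print(looks_low_effort("good"))
    print(looks_low_effort("I have a 3-year-old Golden Retriever named Max."))
```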

4. Don't Reveal the "Right" Answer in the Question

Bad: "We are looking for people who love coffee. Do you drink coffee?"

Good: "How many cups of coffee do you consume on an average day?"

- 0

- 1-2

- 3+

5. Test for Articulation

Sometimes a participant fits the demographic but is terrible at giving feedback. They give one-word answers.

Add a question like: "Tell us about the last time you had a bad customer service experience."

If they write meaningful sentences, they will likely be a good interviewee. If they write "idk," screen them out.
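One rough way to approximate "meaningful sentences" is to count sentences and words in the answer. The sketch below is only a heuristic with assumed thresholds (two sentences, fifteen words), and the function names are hypothetical. It cannot judge whether an answer is relevant, so treat it as a first pass before reading the responses yourself.

```python
import re


def articulation_summary(answer):
    """Return (sentence_count, word_count) for a free-text answer."""
    # Split on runs of sentence-ending punctuation and drop empty pieces.
    sentences = [s for s in re.split(r"[.!?]+", answer) if s.strip()]
    words = answer.split()
    return len(sentences), len(words)


def seems_articulate(answer, min_sentences=2, min_words=15):
    """Assumed thresholds; tune them against answers you have already reviewed."""
    sentences, words = articulation_summary(answer)
    return sentences >= min_sentences and words >= min_words


if __name__ == "__main__":
    print(seems_articulate("idk"))
    print(seems_articulate(
        "I called my bank about a double charge last month. "
        "The agent put me on hold for forty minutes and then hung up, "
        "so I switched banks."
    ))
```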

Conclusion

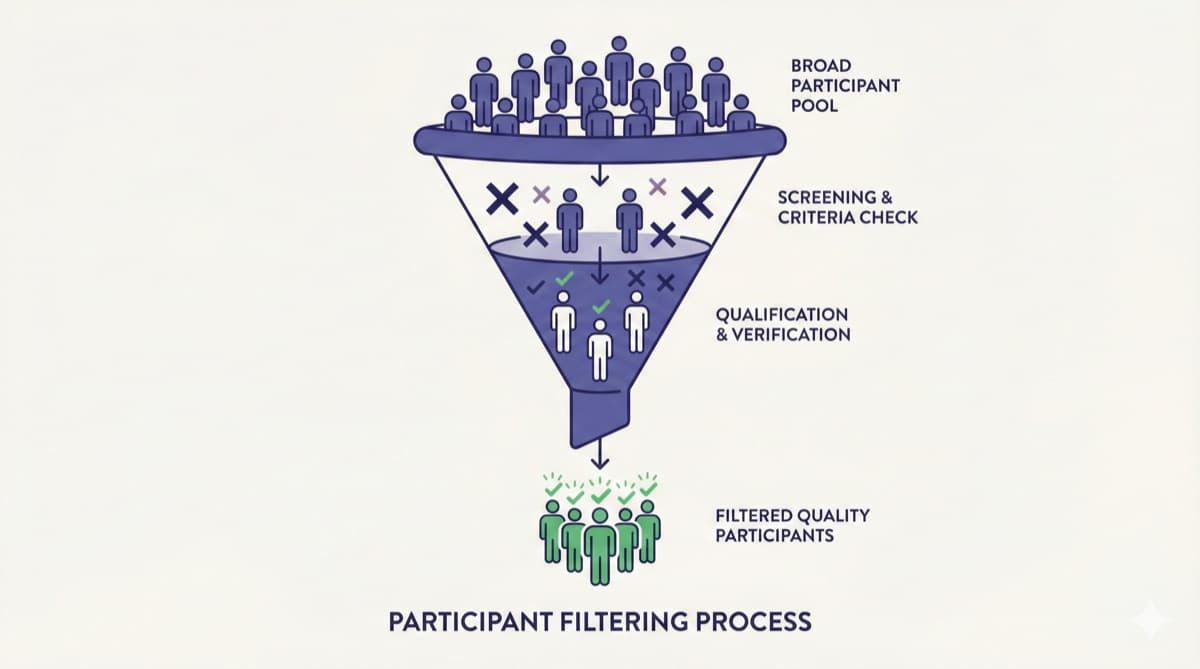

Screening is the gatekeeper of data quality. It's better to have 5 high-quality participants than 50 low-quality ones.

At Feedbackerr, we provide built-in screening tools and access to verified panels to help you find the needle in the haystack.

