AI vs. Human Moderators: The Future of User Research

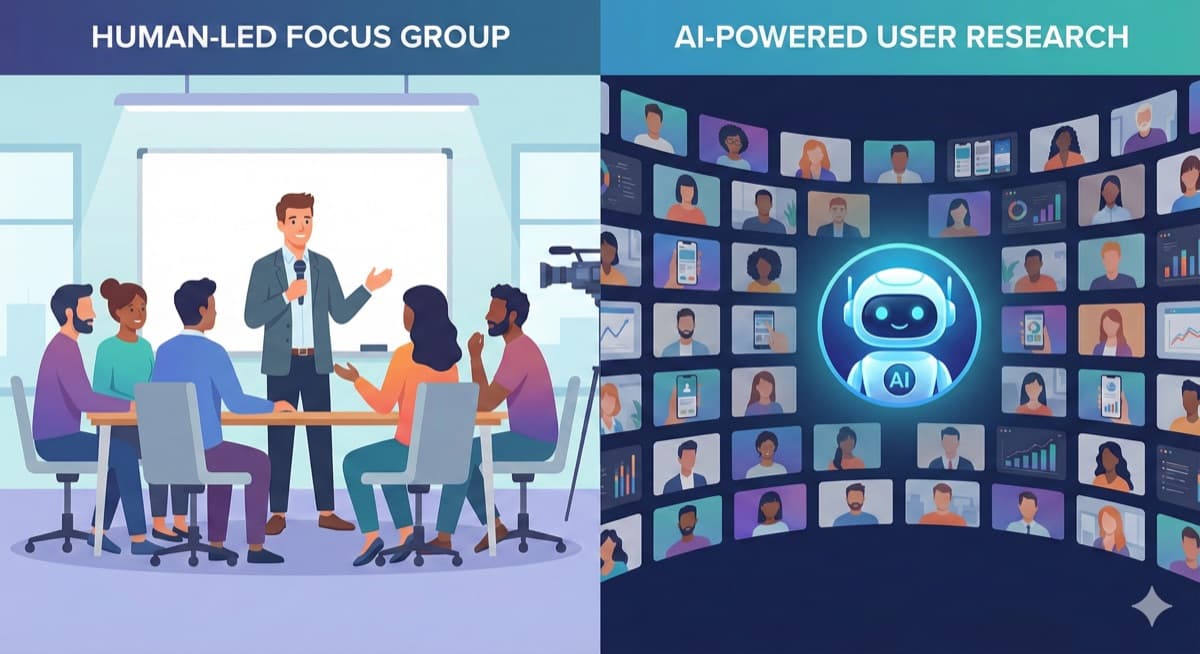

The Great Debate: Man vs. Machine

For decades, qualitative research meant one thing: a human researcher sitting across from a participant (or on a Zoom call), asking questions, probing for details, and taking notes.

But with the rise of Large Language Models (LLMs), a new contender has entered the arena: the AI Moderator.

At Feedbackerr, we believe AI is the future of scalable research. But is it right for every situation? Let's break down the pros and cons.

1. Cost and Scalability

Human Moderator:

- Cost: High. Professional researchers charge $100-$300+ per hour.

- Scalability: Low. A human can run only one interview at a time, so 50 interviews take weeks.

AI Moderator:

- Cost: Low. A fraction of the cost of a human moderator.

- Scalability: Near-unlimited. You can launch 100 interviews simultaneously and, if participants are ready, have them all completed in about an hour.

Winner: AI Moderator (by a landslide).

2. Depth of Insight

Human Moderator:

- Humans are masters of empathy. We can read subtle facial expressions, detect hesitation, and pivot the conversation based on "gut feeling."

- Best for: Highly sensitive topics, exploring deep emotional drivers, or complex B2B workflows requiring domain expertise.

AI Moderator:

- Modern AI (like the agents used in Feedbackerr) is surprisingly good at "probing." It listens to the answer and asks relevant follow-up questions ("You mentioned the pricing was confusing. Can you tell me more about that?").

- However, it may miss non-verbal cues or sarcasm.

Winner: Human Moderator (for depth), AI Moderator (for breadth).

3. Bias and Consistency

Human Moderator:

- Humans inevitably bring bias. We might subconsciously steer the participant towards the answer we want, or get tired after the fifth interview and skip questions.

AI Moderator:

- AI is tireless and consistent. It asks the exact same core questions to every participant, ensuring your data is comparable. It doesn't get bored, hungry, or impatient.

Winner: AI Moderator.

4. Speed to Analysis

Human Moderator:

- After the interviews, the human has to re-watch recordings, transcribe the sessions, and synthesize findings. This manual analysis can take days or weeks.

AI Moderator:

- Feedbackerr provides instant transcripts, sentiment analysis, and summary reports the moment the interview ends. You get structured data immediately.

Winner: AI Moderator.

The Verdict

So, should you fire your research team? No.

The best product teams use a hybrid approach:

- Use AI Moderation for broad, evaluative research. Test usability, validate features, and gather feedback from large sample sizes (N=20-50).

- Use Human Moderation for early-stage generative research. Dive deep into user needs, motivations, and complex problem spaces (N=5-10).

Feedbackerr is built to handle the heavy lifting of that first bucket—giving you 80% of the value for 1% of the effort, so your team can focus on the deep strategic work.

Ready to start automating your user research?

Join hundreds of product teams who use Feedbackerr to get actionable insights in hours, not weeks.
